Google’s A2A and the Model Context Protocol (MCP): The Next REST APIs for Machine Learning Development Services

In today’s rapidly advancing AI ecosystem, interoperability, scalability, and efficiency matter more than ever. The days of manually building a complex REST API for every model deployment are numbered. Google’s Agent2Agent (A2A) protocol, together with the Model Context Protocol (MCP) introduced by Anthropic, is set to redefine how businesses build and integrate machine learning development services.

As someone who closely follows the evolution of AI and ML systems, I believe these protocols mark a pivotal shift in how we build and deploy smart, connected applications. For companies offering AI/ML development services, understanding them isn’t optional; it’s a necessity.

What Are A2A and MCP?

Let’s start with the basics.

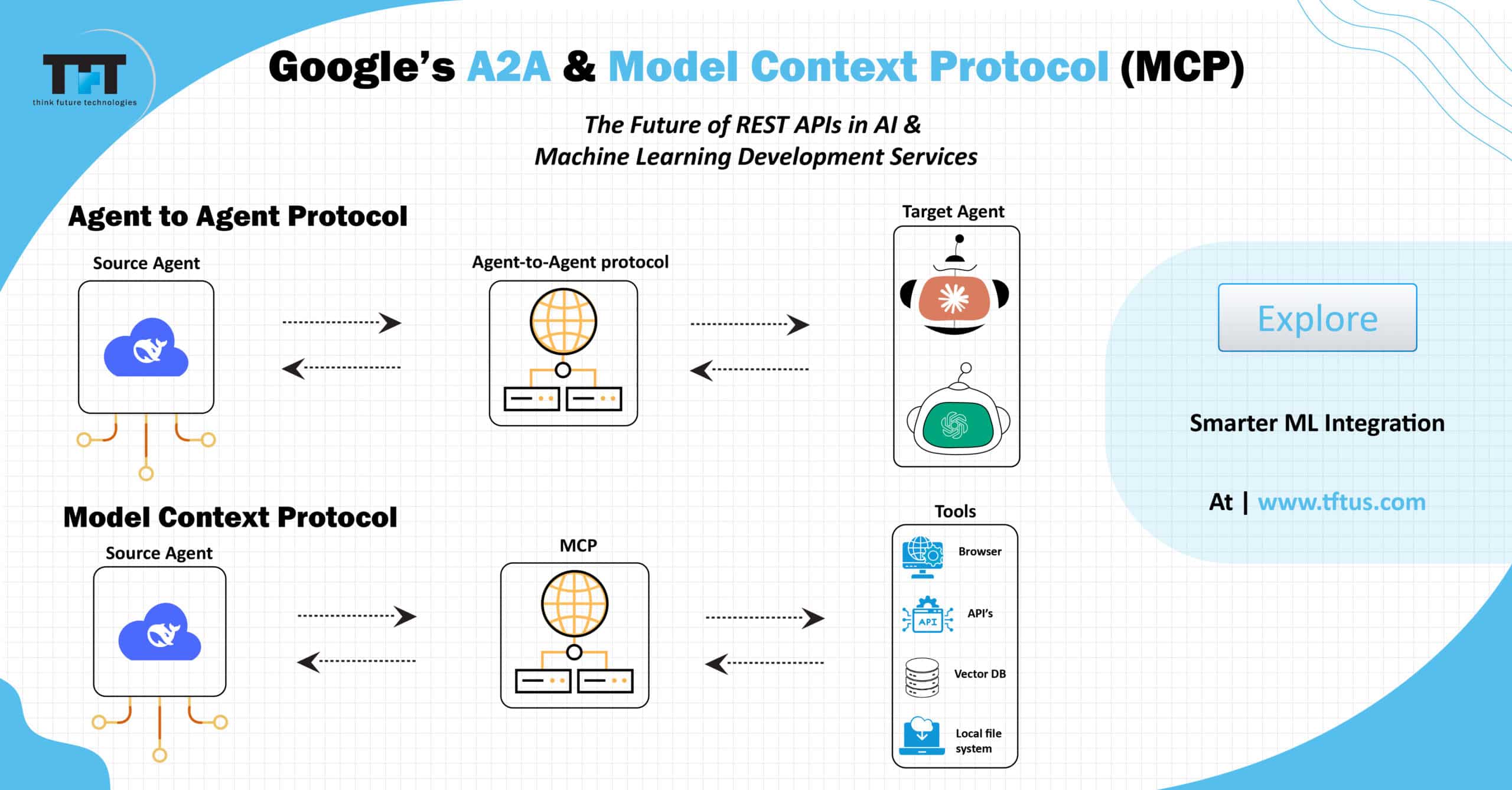

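A2A (Agent2Agent) is an open protocol Google announced in April 2025 to standardize how AI agents, including agents built by different vendors or on different frameworks, communicate with one another, which also makes it a natural way to expose machine learning models as services. Instead of relying on a hand-built REST API for every integration, an agent publishes an Agent Card: a JSON document, served from a well-known URL, that describes its endpoint, its skills, and the authentication it requires. Other agents read that card to discover the agent automatically and then send it tasks over HTTP using JSON-RPC 2.0.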
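To make discovery concrete, here is a minimal Python sketch of how a client might read Agent Cards, the JSON documents A2A agents publish to describe themselves, and pick an agent by skill tag. The fields used (name, url, version, and skills with id, name, and tags) are a subset modeled on the A2A specification; the recommender agent, its URL, and the helper functions are hypothetical, and the sketch parses a card from a string rather than fetching it over HTTP.

```python
import json
from dataclasses import dataclass


@dataclass
class Skill:
    id: str
    name: str
    tags: list[str]


@dataclass
class AgentCard:
    name: str
    url: str
    version: str
    skills: list[Skill]


def parse_agent_card(raw: str) -> AgentCard:
    """Parse only the subset of Agent Card fields this sketch needs."""
    doc = json.loads(raw)
    skills = [
        Skill(id=s["id"], name=s["name"], tags=s.get("tags", []))
        for s in doc.get("skills", [])
    ]
    return AgentCard(name=doc["name"], url=doc["url"], version=doc["version"], skills=skills)


def find_agents_with_tag(cards: list[AgentCard], tag: str) -> list[AgentCard]:
    """Return every agent that advertises at least one skill carrying `tag`."""
    return [card for card in cards if any(tag in skill.tags for skill in card.skills)]


# Hypothetical card, as a client might receive it from the agent's well-known URL.
RECOMMENDER_CARD = """
{
  "name": "Product Recommender",
  "description": "Suggests products based on browsing history",
  "url": "https://recs.example.com/a2a",
  "version": "1.2.0",
  "capabilities": {"streaming": false},
  "skills": [
    {"id": "recommend", "name": "Recommend products",
     "description": "Returns ranked product IDs",
     "tags": ["recommendations", "retail"]}
  ]
}
"""

if __name__ == "__main__":
    cards = [parse_agent_card(RECOMMENDER_CARD)]
    for card in find_agents_with_tag(cards, "retail"):
        print(card.name, card.url)
```

A mobile app or another agent would run the same lookup against every card it knows about, so adding a new model to the ecosystem means publishing a card rather than distributing API documentation by hand.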

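Complementing this is MCP (Model Context Protocol), an open standard introduced by Anthropic in November 2024. MCP standardizes how an AI application gives a model the context it needs: an MCP server exposes tools (each with a name, a description, and a JSON Schema describing its inputs), resources such as files or database records, and reusable prompt templates, and any MCP client can discover and call them through the same JSON-RPC interface. It’s a bit like OpenAPI, but designed specifically for AI applications and the models behind AI and ML development services.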
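As a rough illustration of the schema side of MCP, the following Python sketch describes a tool the way an MCP server lists it (name, description, inputSchema) and builds the JSON-RPC 2.0 `tools/call` request a client would send to invoke it. The `forecast_revenue` tool and its arguments are hypothetical, the required-argument check is a simplified stand-in for full JSON Schema validation, and the transport and initialization handshake are left out.

```python
import itertools
import json

# Hypothetical tool a model-serving team might expose through an MCP server.
# The shape (name, description, inputSchema) follows MCP tool definitions.
FORECAST_TOOL = {
    "name": "forecast_revenue",
    "description": "Forecast monthly revenue for a region",
    "inputSchema": {
        "type": "object",
        "properties": {
            "region": {"type": "string"},
            "horizon_months": {"type": "integer"},
        },
        "required": ["region", "horizon_months"],
    },
}

# Monotonically increasing JSON-RPC request ids.
_request_ids = itertools.count(1)


def missing_required(tool: dict, arguments: dict) -> list[str]:
    """Simplified check: list required properties absent from `arguments`.

    A real client would run a full JSON Schema validator instead.
    """
    return [key for key in tool["inputSchema"].get("required", []) if key not in arguments]


def build_tools_call(tool: dict, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 `tools/call` request, rejecting incomplete arguments."""
    missing = missing_required(tool, arguments)
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }


if __name__ == "__main__":
    request = build_tools_call(FORECAST_TOOL, {"region": "EMEA", "horizon_months": 6})
    print(json.dumps(request, indent=2))
```

Because the schema travels with the tool definition, a client can reject a malformed call before it ever reaches the model server.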

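Google has positioned the two as complementary: MCP connects an agent to its tools and data, while A2A connects agents to each other. Together, they form a backbone for more intelligent and interoperable systems.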

Why It Matters for Machine Learning Development Services

If you’re offering machine learning app development services, this innovation changes the way your solutions are designed from the ground up. Traditionally, deploying a model means:

- Setting up infrastructure
- Creating custom endpoints
- Managing API authentication and documentation
- Handling model versioning manually

With A2A and MCP, several of these steps can be streamlined or, in some cases, eliminated:

- Agents and tool servers become self-describing endpoints
- Models are discoverable and reusable
- Contextual information travels with every call
- You can integrate seamlessly across multi-app ecosystems

For machine learning services companies, this means faster deployment, better scalability, and lower maintenance overhead.

Use Cases and Applications

Here’s how this could play out in real-world scenarios:

1. Retail Recommendation Engines

A mobile shopping app can now discover and use a centralized recommendation model from a data science team without needing to build an API from scratch.

2. Healthcare Diagnostics

A clinical imaging tool can invoke a diagnostic model via A2A, embedding patient metadata and usage context through MCP. Structured context of this kind can reduce errors and support compliance.
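For the healthcare scenario, the request a clinical tool sends might look something like the Python sketch below, which packs a plain-text instruction and a structured data part into one message. The method and field names are modeled on the JSON-RPC `message/send` call in recent A2A spec versions (earlier drafts used different names, such as `tasks/send`), and the study URI and metadata fields are hypothetical placeholders; only de-identified fields appear in the example.

```python
import json
import uuid


def build_diagnosis_request(study_uri: str, patient_meta: dict, purpose: str) -> dict:
    """Build an A2A-style JSON-RPC request asking a diagnostic agent to assess a study.

    Modeled on the `message/send` method in recent A2A spec versions;
    verify method and field names against the version you deploy.
    """
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),  # JSON-RPC request id
        "method": "message/send",
        "params": {
            "message": {
                "role": "user",
                "messageId": str(uuid.uuid4()),
                "parts": [
                    # Human-readable instruction for the diagnostic agent.
                    {"kind": "text", "text": f"Assess the imaging study at {study_uri}."},
                    # Structured context travels in the same message as the instruction.
                    {"kind": "data", "data": {"patient": patient_meta, "purpose": purpose}},
                ],
            }
        },
    }


if __name__ == "__main__":
    request = build_diagnosis_request(
        "https://imaging.example.com/studies/12345",  # hypothetical study URI
        {"age_band": "60-69", "sex": "F", "modality": "CT"},  # hypothetical de-identified fields
        "triage",
    )
    print(json.dumps(request, indent=2))
```

Keeping the metadata in its own structured part, rather than folding it into the text, lets the receiving agent validate and log it separately from the instruction.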

3. Financial Forecasting

Finance platforms can dynamically select and run the latest forecasting models, complete with contextual indicators like market conditions or region, using MCP.
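The dynamic selection step in the finance scenario can be sketched in a few lines of Python: given a catalog of forecasting models, each tagged with a version and the regions it covers, pick the newest model that covers the requested region. The catalog entries, tool names, and version numbers are hypothetical; in practice the catalog might be assembled from MCP tool listings or A2A Agent Cards.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ForecastModel:
    tool_name: str                 # hypothetical name the model is exposed under
    version: tuple[int, int, int]  # semantic version as (major, minor, patch)
    regions: frozenset[str]        # regions the model is approved for


def parse_version(text: str) -> tuple[int, int, int]:
    """Turn "1.10.0" into (1, 10, 0) so versions compare numerically."""
    major, minor, patch = (int(part) for part in text.split("."))
    return (major, minor, patch)


def latest_for_region(catalog: list[ForecastModel], region: str) -> Optional[ForecastModel]:
    """Return the highest-versioned model covering `region`, or None if none does."""
    eligible = [m for m in catalog if region in m.regions]
    return max(eligible, key=lambda m: m.version, default=None)


# Hypothetical catalog, e.g. assembled from MCP tool listings or A2A Agent Cards.
CATALOG = [
    ForecastModel("forecast_v1", parse_version("1.4.2"), frozenset({"EMEA", "APAC"})),
    ForecastModel("forecast_v2", parse_version("2.0.0"), frozenset({"EMEA"})),
    ForecastModel("forecast_v2_apac", parse_version("2.1.0"), frozenset({"APAC"})),
]

if __name__ == "__main__":
    for region in ("EMEA", "APAC", "LATAM"):
        model = latest_for_region(CATALOG, region)
        print(region, "->", model.tool_name if model else "no model available")
```

Versions are compared as integer tuples rather than strings, so 1.10.0 correctly sorts after 1.9.0, and a region with no approved model yields None instead of silently falling back to an unrelated one.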

These examples show how AI and machine learning development services can evolve from isolated components into interconnected ecosystems.

How This Impacts AI/ML Development Services

For those of us delivering AI/ML development services, A2A and MCP offer a new set of building blocks. Here’s what changes:

- Less manual API creation: spend more time refining models and less time deploying them.
- Standardized integration: whether the consumer is a web app, a mobile app, or another ML service, integrations are easier and more robust.
- Enhanced modularity: build once and deploy everywhere, across different applications or teams.
- Greater transparency and documentation: MCP tool definitions carry built-in schemas that clarify expected inputs and, in recent spec versions, outputs.

This isn’t just a convenience upgrade—it’s a strategic advantage.

Why Enterprises Should Pay Attention

Enterprises embracing AI and machine learning development services are under constant pressure to innovate faster. Whether the goal is automating internal workflows or delivering intelligent customer-facing products, time-to-market is everything.

With A2A and MCP, you can:

- Reduce technical friction between teams
- Enable cross-platform intelligence sharing
- Support governance and compliance through built-in context protocols
- Increase the lifecycle value of every model

If your organization struggles with siloed AI initiatives, these protocols are your roadmap to integration and operational excellence.

Integration with Tools Like LangChain and Vertex AI

Leading-edge frameworks like LangChain already apply similar ideas, letting agents invoke models and tools dynamically, and LangChain offers adapters for loading MCP tools into its agents. Meanwhile, Google’s Vertex AI is expected to fully support A2A and MCP, making it easier to manage and scale models across the enterprise.

These integrations signal that A2A and MCP aren’t just theoretical—they’re the future of applied AI architecture.

Outbound Resource to Explore

To better understand how A2A and MCP fit into the broader AI ecosystem, I recommend reading this article by Google Cloud, which offers a technical breakdown and potential implementation strategies.

Conclusion: A Smarter, Context-Rich Future

Google’s A2A and the Model Context Protocol (MCP) are not incremental upgrades. They represent a fundamental shift in how we approach machine learning development services. For professionals like me, and for the teams that companies rely on for machine learning services, they open the door to more modular, discoverable, and scalable systems.

As the complexity of AI applications grows, adopting these protocols isn’t just beneficial—it’s imperative. They allow us to build systems that are intelligent by design, context-aware by default, and scalable by necessity.

If your business is looking to future-proof its AI infrastructure or enhance its ML offerings, now is the time to rethink how models are deployed, invoked, and integrated.

Ready to build smarter, scalable AI systems?

👉 Explore our AI/ML solutions at www.tftus.com
